Reduce LLM Serving Costs by up to 65%

Accelerate AI deployment and inference while optimizing hardware infrastructure

Try Now

Companies that trust us

Slash LLM deployment time from weeks to minutes

Preview performance, right-size resources, and automatically apply optimizations in a single click with CentML.

Peak Performance, Maximum Flexibility

Deploy on any cloud or VPC. Abstract away configuration complexity. Get the latest hardware at the best pricing without contract lock-in.

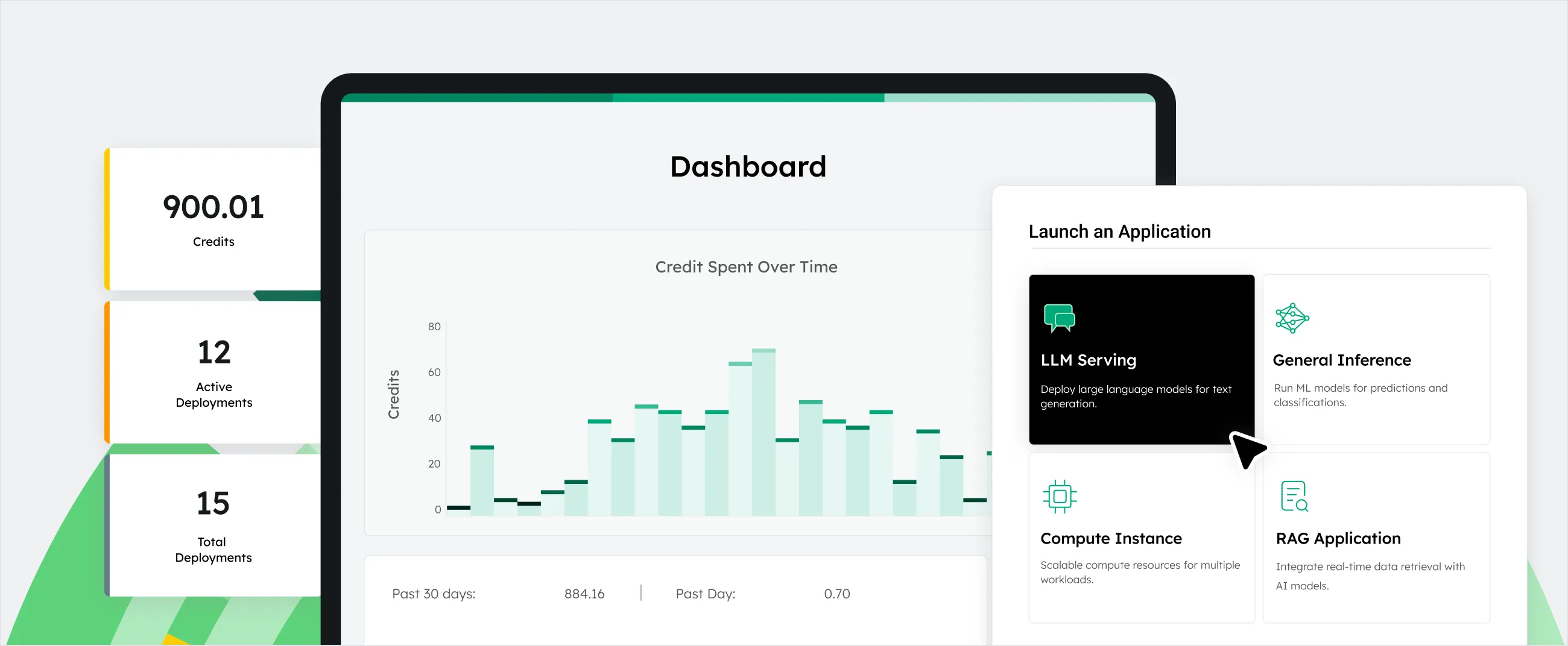

Our solution

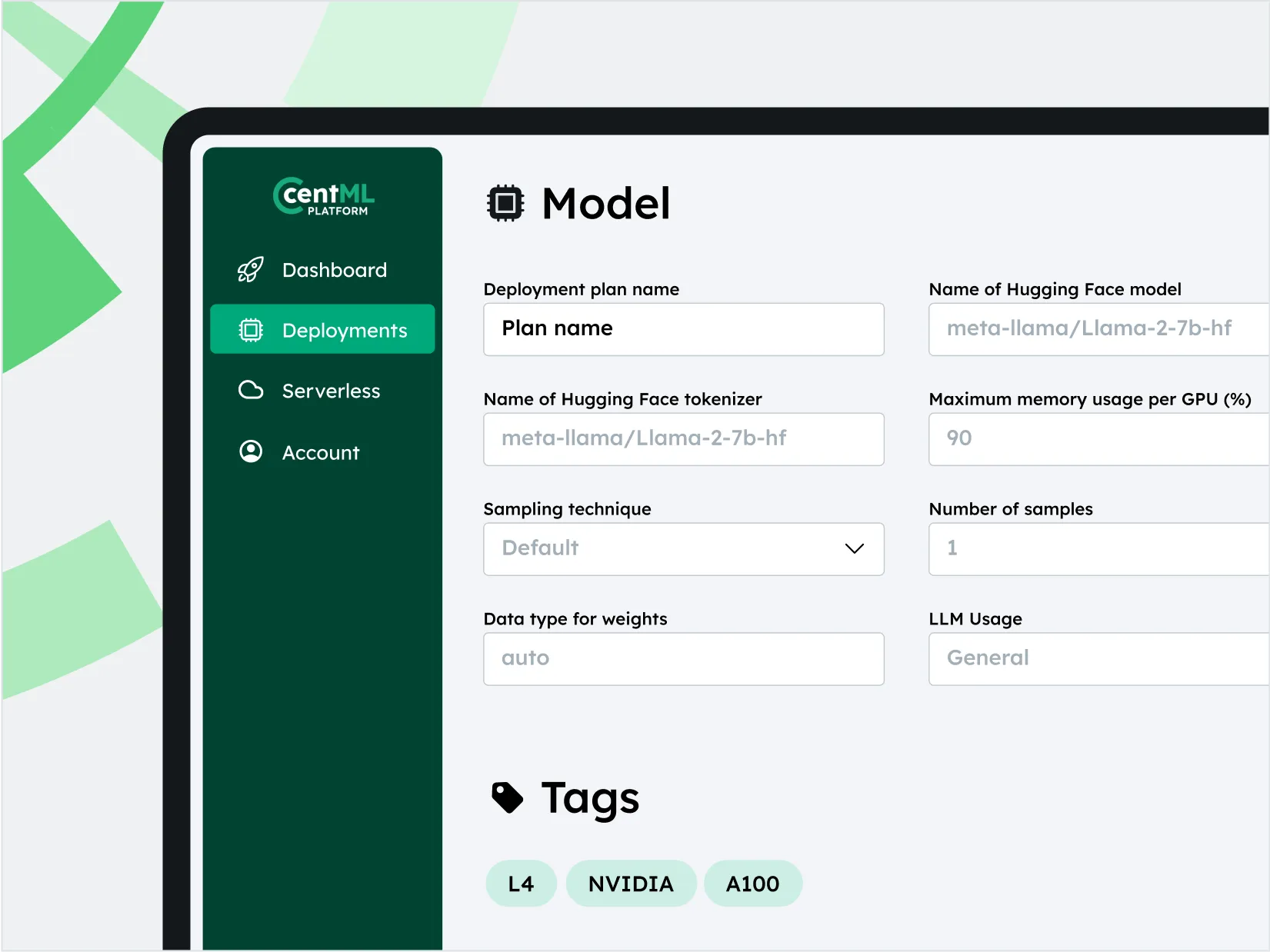

Take the guesswork out of LLM deployment.

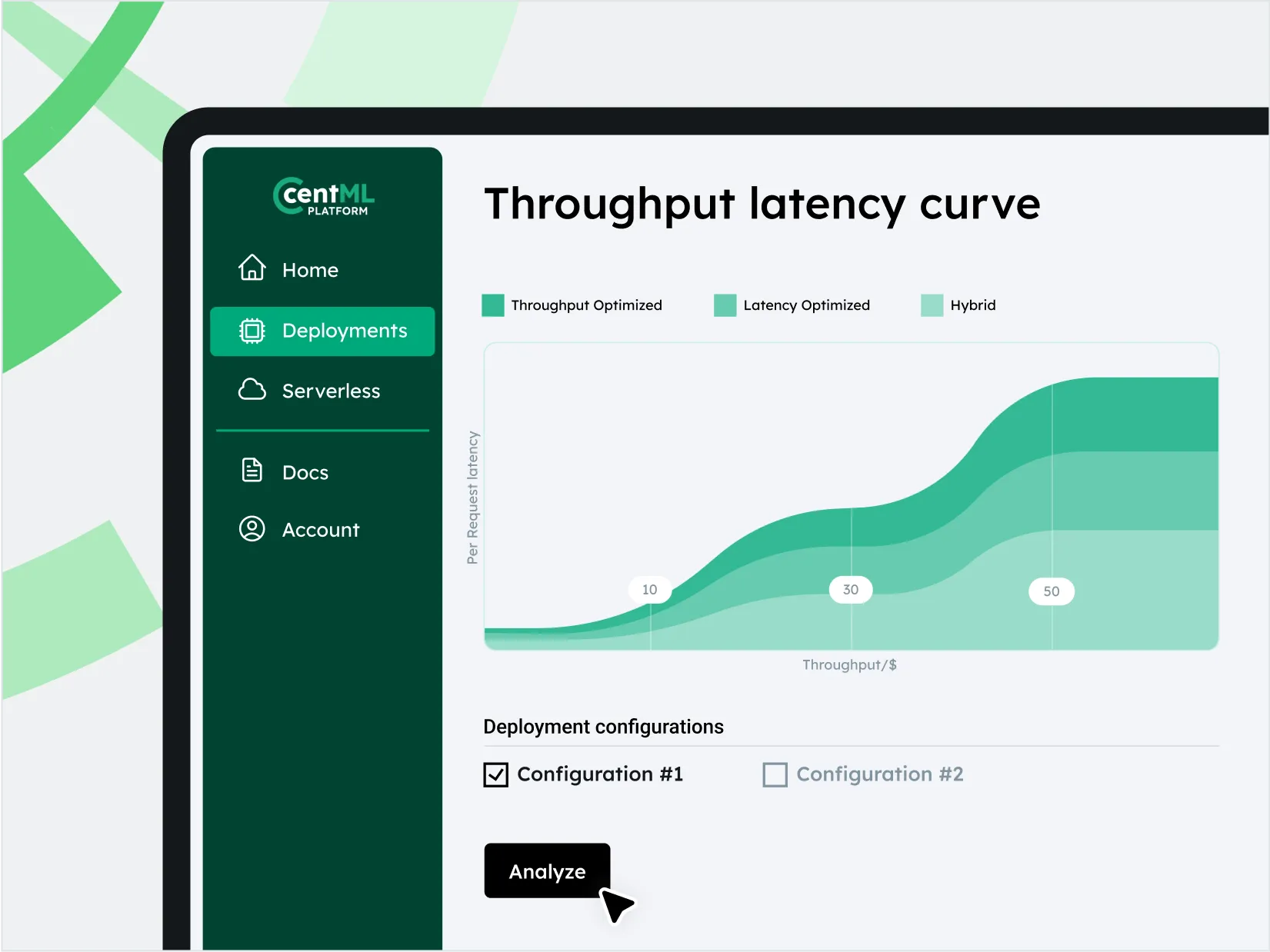

Advanced System Optimization

Save costs with more efficient hardware utilization.

Right-size hardware usage with cutting-edge memory management techniques.

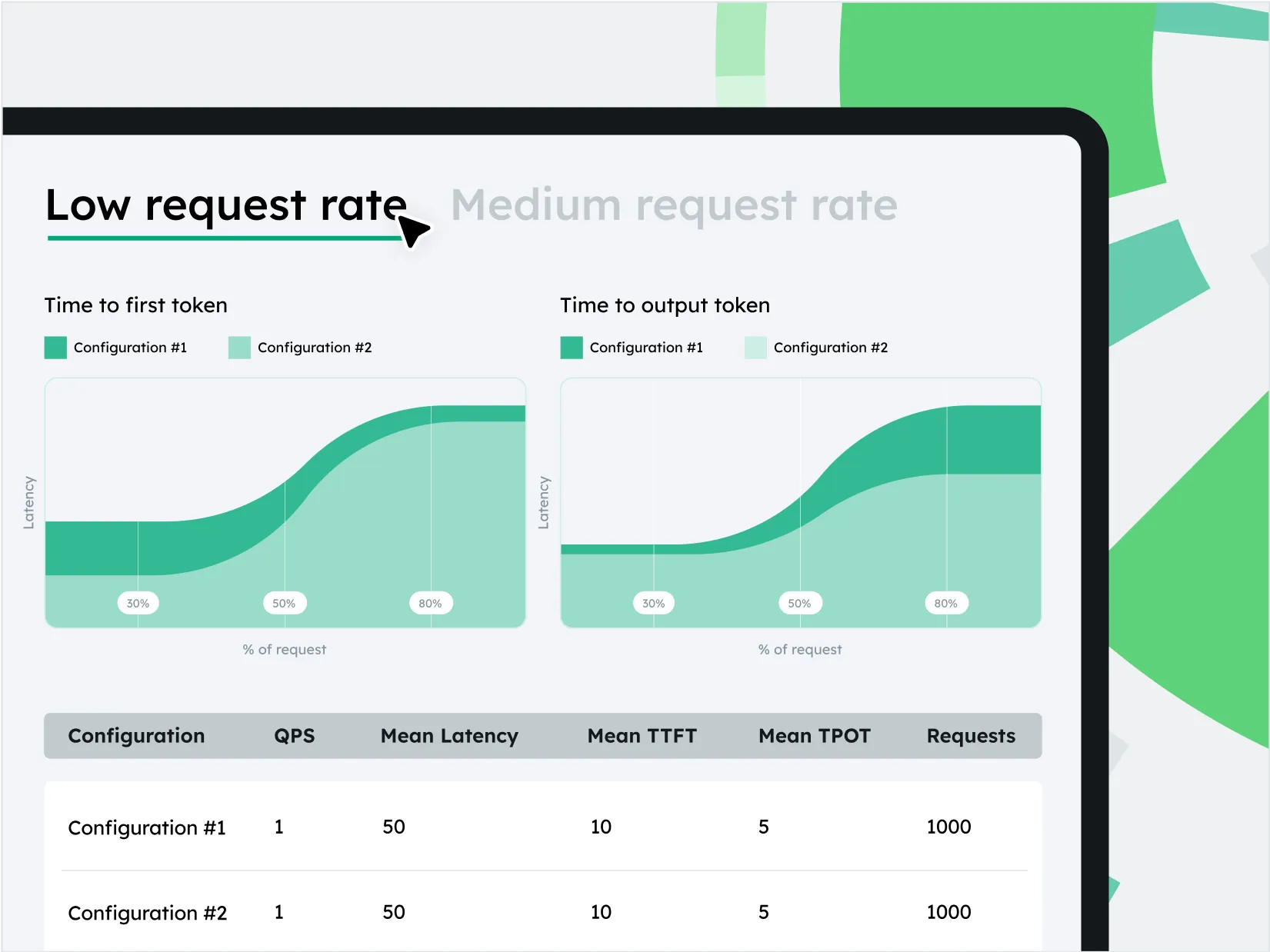

Deployment Planning and Serving at Scale

Streamline LLM deployment with single-click resource sizing and model serving.

Boost performance with reduced latency and maximized throughput at scale.

Diverse Hardware, Model, and Modality Support

Day-one support for popular open-source LLMs to unlock your agentic use cases.

Enterprise-grade execution engine supports multiple backends and compute.

Case Studies

Maximizing LLM training and inference efficiency using CentML on OCI

In partnership with CentML, Oracle has developed innovative solutions to meet the growing demand for high-performance NVIDIA GPUs for machine learning (ML) model training and inference.

- 48% improvement on LLaMA inference serving performance
- 1.2x increase in performance on NVIDIA A100

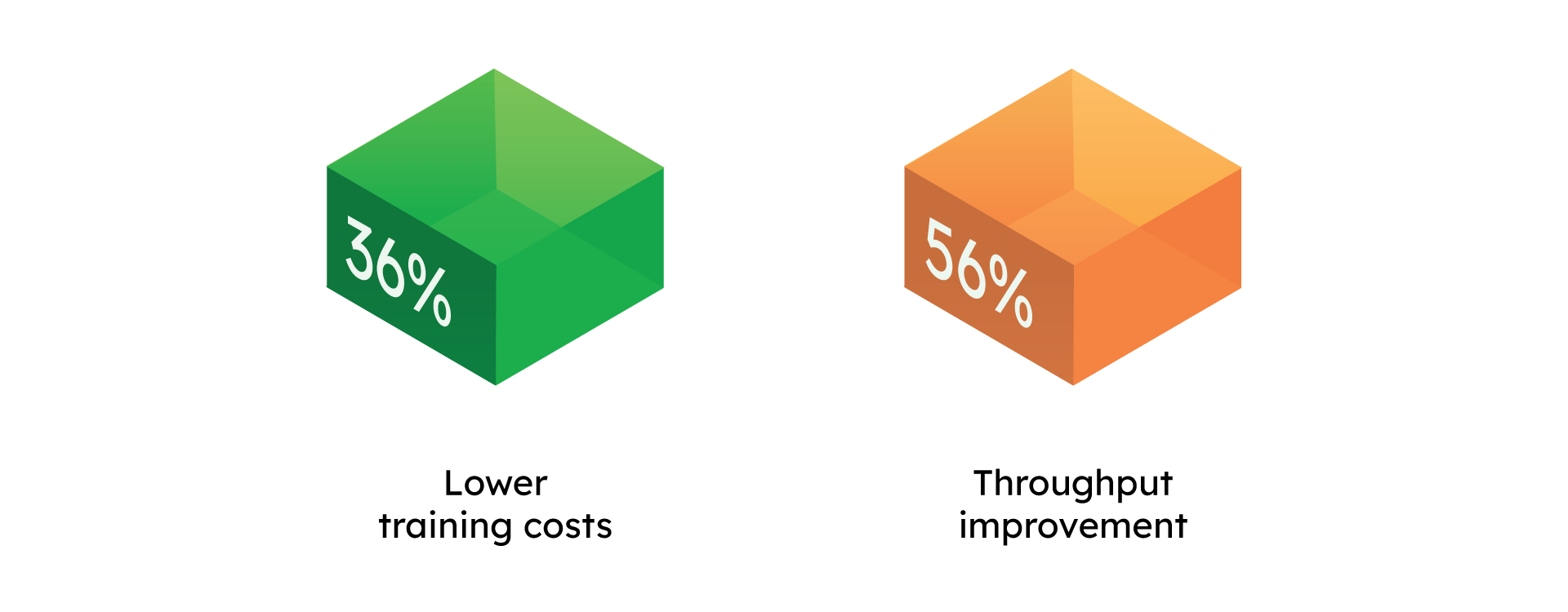

GenAI company cuts training costs by 36% with CentML

A growing generative AI company partnered with CentML to accelerate their API-as-a-service and iterate with foundational models.

- 36% lower training costs
- 56% increase in performance on NVIDIA A100

Ecosystem Support

Testimonials

Get started with CentML

Ready to simplify your LLM deployment and accelerate your AI initiatives? Let's talk.

Book a Demo