Building Modern GenAI: How We Packaged CServe as a Snowflake Native App

Learn how CentML packaged CServe as a Snowflake Native App and how to deploy your applications on Snowpark Container Services.


Published: Oct 21, 2024

A few weeks ago, we announced the availability of CentML CServe on Snowflake Marketplace. Below, we take you through the journey of packaging CServe as a Native App for Snowpark Container Services.

First, the Basics: What is CServe?

CServe is an efficient serving engine for large language models (LLMs). It uses techniques such as model offloading, GPU sharding, and speculative decoding to get peak performance out of the available hardware.

These optimizations make both real-time and batch processing more efficient across a wide range of system configurations.

Building Modern GenAI Systems

When Snowflake announced the General Availability of GPU compute instances on Snowpark Container Services (SPCS), it delivered the last missing pillar for building modern GenAI systems: accelerated compute.

Combined with data and permissively licensed open-source foundation models, this accelerated compute gave us all the tools we needed to bring GenAI to enterprise customers.

Snowflake Technologies We Leveraged

- Snowpark Container Services: Enables containerized applications to run on Snowflake’s managed infrastructure, leveraging GPUs for AI workloads.

- GPU Compute Instances: Snowflake now offers GPU acceleration, allowing applications like CServe to handle resource-intensive AI workloads such as real-time LLM inference.

- Native App Framework: Lets providers package applications like CServe so that consumers can install them in their own Snowflake accounts and operate and manage them through SQL commands.

- Data Marketplace: Allows CServe users to access and analyze vast amounts of data directly from Snowflake’s marketplace, unlocking new AI-driven insights.

- Image Repository: Stores and manages Docker images for containerized applications, streamlining the deployment process on SPCS.

Use Case Examples

1. Real-Time Customer Support

Challenge: In a high-volume customer support center that manages thousands of live chats, speed and accuracy are critical.

Solution: With CServe running on Snowflake’s GPU compute infrastructure, answers can be extracted from a knowledge base in real time. CServe’s optimized LLM serving gives support agents fast access to accurate, relevant information, reducing response times and improving the overall customer experience.

2. Batch Document Summarization for Legal Firms

Challenge: Legal firms often need to review hundreds of documents when preparing a case, a time-consuming and labor-intensive process.

Solution: By running CServe on Snowflake, firms can automate document summarization at scale. With CServe serving LLMs on GPU compute instances, large batches of legal documents can be summarized efficiently and accurately. This automation frees lawyers to focus on the critical aspects of a case, improving productivity and speeding up case preparation.

3. Customer Feedback Analysis

Challenge: Consider a company that needs to analyze and summarize thousands of customer feedback documents in regular batch updates.

Solution: By utilizing CServe on Snowflake’s secure, GPU-accelerated infrastructure, the company can efficiently deploy LLMs to process and summarize feedback. This approach not only cuts down the time required for manual analysis but also ensures data privacy, as all processing occurs within the company’s Snowflake environment.

Deploying Your Application on SPCS

Snowflake Package Structure

Snowpark Container Services (SPCS) follows the Kubernetes paradigm and allows developers to run containerized applications on Snowflake-provided compute resources named Compute Pools.

Let’s quickly compare Kubernetes and SPCS terms:

| Kubernetes | SPCS |
|---|---|
| Node | Compute Pool |
| Deployment | Job/Service |
| Namespace | Database/Schema |
| Pod | Container |
| Image Registry | Image Repository |

To deploy your application on SPCS, you will need to:

- Push your Docker container image to the Snowflake image repository

- Create a long-running service or a job by providing required specifications, similar to Kubernetes

- A long-running service can be wrapped in a service function, which lets you call your application directly from SQL.
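
The three steps can be sketched as plain SQL text. The following Python script does not connect to Snowflake. It only assembles the statements so their shape is visible. All object names are hypothetical: `cserve_db`, `cserve_repo`, `cserve_pool`, `llm_service`, the `api` endpoint, port 8000, `llm_complete`, and the `/complete` path. The instance family `GPU_NV_S` is one GPU option on AWS; availability varies by cloud and region. The statements follow the general form of Snowflake's `CREATE COMPUTE POOL`, `CREATE SERVICE ... FROM SPECIFICATION`, and `CREATE FUNCTION ... SERVICE = ... ENDPOINT = ...` syntax, so check them against the current SPCS reference before running them. The image itself is pushed with the usual `docker login`, `docker tag`, and `docker push` commands against the repository URL reported by `SHOW IMAGE REPOSITORIES`.

```python
from textwrap import dedent

# Hypothetical object names, for illustration only.
DB, SCHEMA, REPO = "cserve_db", "public", "cserve_repo"
POOL, SERVICE, ENDPOINT = "cserve_pool", "llm_service", "api"
# Image path as referenced from a service specification: /<db>/<schema>/<repo>/<image>:<tag>
IMAGE = f"/{DB}/{SCHEMA}/{REPO}/llm-server:latest"


def compute_pool_sql(instance_family: str = "GPU_NV_S") -> str:
    """Compute pool: the Snowflake-managed nodes the containers are scheduled on."""
    return (
        f"CREATE COMPUTE POOL IF NOT EXISTS {POOL}\n"
        "  MIN_NODES = 1\n"
        "  MAX_NODES = 1\n"
        f"  INSTANCE_FAMILY = {instance_family};"
    )


def service_sql(port: int = 8000, gpus: int = 1) -> str:
    """Long-running service described by a Kubernetes-like YAML specification."""
    spec = dedent(f"""\
        spec:
          containers:
          - name: llm
            image: {IMAGE}
            resources:
              requests:
                nvidia.com/gpu: {gpus}
              limits:
                nvidia.com/gpu: {gpus}
          endpoints:
          - name: {ENDPOINT}
            port: {port}
        """)
    return (
        f"CREATE SERVICE IF NOT EXISTS {SERVICE}\n"
        f"  IN COMPUTE POOL {POOL}\n"
        f"  FROM SPECIFICATION $$\n{spec}$$;"
    )


def service_function_sql(path: str = "/complete") -> str:
    """Service function: maps an HTTP path on the service endpoint to a SQL function."""
    return (
        "CREATE OR REPLACE FUNCTION llm_complete(prompt VARCHAR)\n"
        "  RETURNS VARCHAR\n"
        f"  SERVICE = {SERVICE}\n"
        f"  ENDPOINT = {ENDPOINT}\n"
        f"  AS '{path}';"
    )


if __name__ == "__main__":
    for statement in (compute_pool_sql(), service_sql(), service_function_sql()):
        print(statement, end="\n\n")
```

Once such a function exists, it is called like any other SQL function, for example `SELECT llm_complete(review_text) FROM reviews;` against a hypothetical `reviews` table.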

Next, you can convert your SPCS application into a Snowflake Native App using the Native App Framework, which helps you initialize the project structure and pre-populates the required project files.

Here is a great tutorial to get started. The main Native App files are:

- Setup script: An SQL script that runs automatically when a consumer installs an app in their account.

- Manifest file: A YAML file that contains basic configuration information about the app.

- Project definition file: A YAML file that contains information about the Snowflake objects that you want to create.
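
To make the three files concrete, here is a minimal Python scaffolding sketch that writes a skeleton of each one. Everything in it is an assumption for illustration: the app name `cserve_app`, the image path, the `app_user` role, the `core` schema, and the choice of the version-1 `snowflake.yml` layout (newer Snowflake CLI releases also accept a `definition_version: 2` format). The Snowflake CLI can generate similar files from a template. This is not the CServe project layout.

```python
import tempfile
from pathlib import Path
from textwrap import dedent

# Hypothetical file contents; replace names and the image path with your own.
FILES = {
    "app/manifest.yml": dedent("""\
        manifest_version: 1
        artifacts:
          setup_script: setup_script.sql
          container_services:
            images:
            - /cserve_db/public/cserve_repo/llm-server:latest
        """),
    "app/setup_script.sql": dedent("""\
        -- Runs in the consumer account when the app is installed.
        CREATE APPLICATION ROLE IF NOT EXISTS app_user;
        CREATE OR ALTER VERSIONED SCHEMA core;
        GRANT USAGE ON SCHEMA core TO APPLICATION ROLE app_user;
        """),
    "snowflake.yml": dedent("""\
        definition_version: 1
        native_app:
          name: cserve_app
          artifacts:
            - src: app/*
              dest: ./
        """),
}


def scaffold(root: Path) -> list[Path]:
    """Write the skeleton files under `root` and return the paths written."""
    written = []
    for rel_path, content in FILES.items():
        path = root / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content)
        written.append(path)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for p in scaffold(Path(tmp)):
            print(p.relative_to(tmp))
```

The manifest points at the setup script by a path relative to itself. That is why both files sit together in `app/` and the project definition copies that directory to the root of the application package.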

The CServe Native App was purpose-built to let Snowflake customers install and run CServe LLM functions within their own Snowflake accounts.
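
LLM functions like these are service functions. When one is called, Snowflake batches the input rows and POSTs them to the service endpoint as JSON of the form `{"data": [[row_index, arg, ...], ...]}`. It expects a response of the same shape, with one `[row_index, result]` pair per input row. The following stdlib-only Python server is a minimal sketch of that contract and is not CServe's implementation. `generate` is a hypothetical placeholder for the actual model call, and port 8000 is an assumption that must match the endpoint declared in the service specification.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Hypothetical stand-in for the LLM call; a real server would run inference here."""
    return prompt.upper()


def handle_batch(body: bytes) -> bytes:
    """Map Snowflake's batched rows [[row_index, arg], ...] to [[row_index, result], ...]."""
    rows = json.loads(body)["data"]
    results = [[row[0], generate(row[1])] for row in rows]
    return json.dumps({"data": results}).encode()


class ServiceFunctionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # This toy server answers POSTs on any path; a real one would route by self.path.
        length = int(self.headers.get("Content-Length", 0))
        payload = handle_batch(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    # The port must match the endpoint port in the service specification.
    HTTPServer(("0.0.0.0", 8000), ServiceFunctionHandler).serve_forever()
```

Keeping the batch logic in a pure `handle_batch` function separates the row-index bookkeeping from the HTTP plumbing, so the contract can be tested without starting a server.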

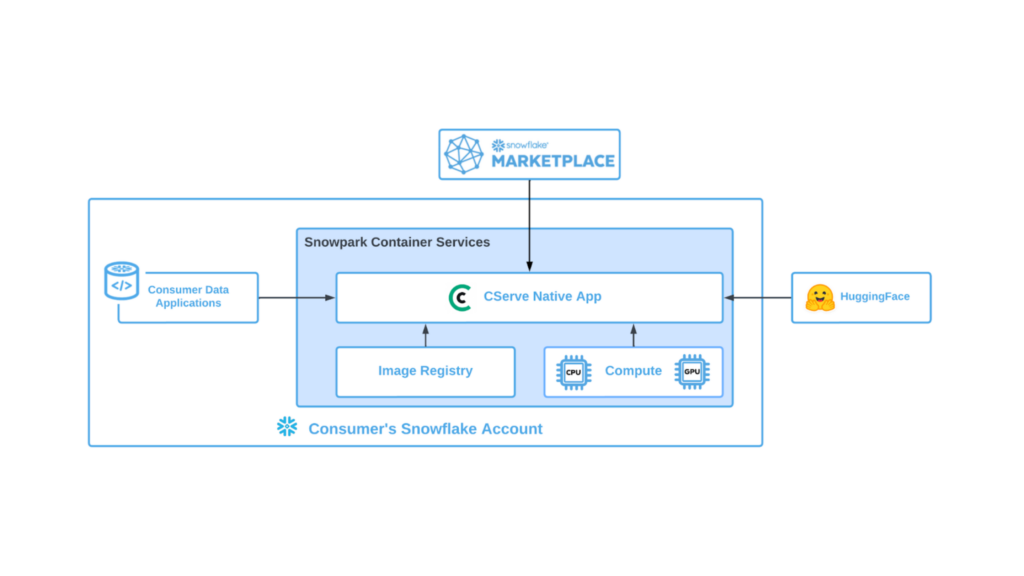

Here is a high-level overview of how the CServe Native App interacts with resources:

- The consumer explicitly grants every privilege the application requires and has full visibility into compute resource usage.

- All data processing happens within the customer’s Snowflake account perimeter, keeping sensitive data secure.
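
The grant model has two halves. The app declares the account-level privileges it needs in the `privileges` section of `manifest.yml`. The consumer then approves each one, either with a `GRANT ... ON ACCOUNT TO APPLICATION` statement or, for apps that support it, through Snowsight. The following Python sketch renders both halves as text. The two privileges shown are ones an SPCS-based app commonly requests. The list and the app name `cserve_app` are illustrative assumptions, not CServe's actual manifest.

```python
# Illustrative privilege requests; the real list depends on what the app creates.
REQUESTED = {
    "CREATE COMPUTE POOL": "Lets the app create the compute pool its service runs on",
    "BIND SERVICE ENDPOINT": "Lets the app expose service endpoints",
}


def manifest_privileges_yaml(privileges: dict[str, str]) -> str:
    """Render the `privileges` section of manifest.yml declaring what the app asks for."""
    lines = ["privileges:"]
    for name, description in privileges.items():
        lines.append(f"  - {name}:")
        lines.append(f'      description: "{description}"')
    return "\n".join(lines) + "\n"


def consumer_grants(app_name: str, privileges: dict[str, str]) -> list[str]:
    """GRANT statements a consumer would run to approve each requested privilege."""
    return [f"GRANT {name} ON ACCOUNT TO APPLICATION {app_name};" for name in privileges]


if __name__ == "__main__":
    print(manifest_privileges_yaml(REQUESTED))
    for statement in consumer_grants("cserve_app", REQUESTED):
        print(statement)
```

Nothing is granted implicitly. Each privilege the app can use corresponds to a statement the consumer chose to run.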

How to Submit for Snowflake Marketplace Review

To help ensure your application meets all Marketplace standards and requirements, Snowflake offers a resource called the ‘Provider Playbook,’ which covers everything you need to know to list applications on the Marketplace.

Going Forward with CServe on Snowflake

With the seamless integration of CServe as a Snowflake Native App, enterprises can now harness the power of LLMs while leveraging Snowflake’s secure, scalable infrastructure.

Whether you’re implementing real-time AI applications or batch processing, CServe and Snowflake provide an ideal combination to bring cutting-edge AI to your organization.

Ready to supercharge your deployments? To learn more about how CentML can optimize your AI models, book a demo today.