GenAI company cuts training costs by 36% with CentML

A growing generative AI company partnered with CentML to accelerate their API-as-a-service and iterate with foundational models—all without using top-of-the-line NVIDIA GPUs like the A100.

The challenge

A growing generative AI company realized that their modern Large Language Models (LLMs) needed powerful GPUs for pre-training, fine-tuning, and inference. They used the Hugging Face Trainer to fine-tune models like Llama with 7B to 65B parameters in a parameter-efficient fine-tuning manner.

The company’s challenge was twofold:

- Getting A100s (high-end GPUs) was difficult, which made it hard to do fine-tuning jobs.

- Standard training on V100s (mid-range GPUs) had limited throughput.

Rather than wait for A100s, the company partnered with CentML. The CentML team has significantly improved the company’s ML workloads in terms of performance and cost-effectiveness by optimizing the use of V100 for advanced ML solutions.

The solution

We began our partnership by applying advanced memory optimization techniques to fit the company’s model on the more abundant and less expensive GPUs, like NVIDIA V100.

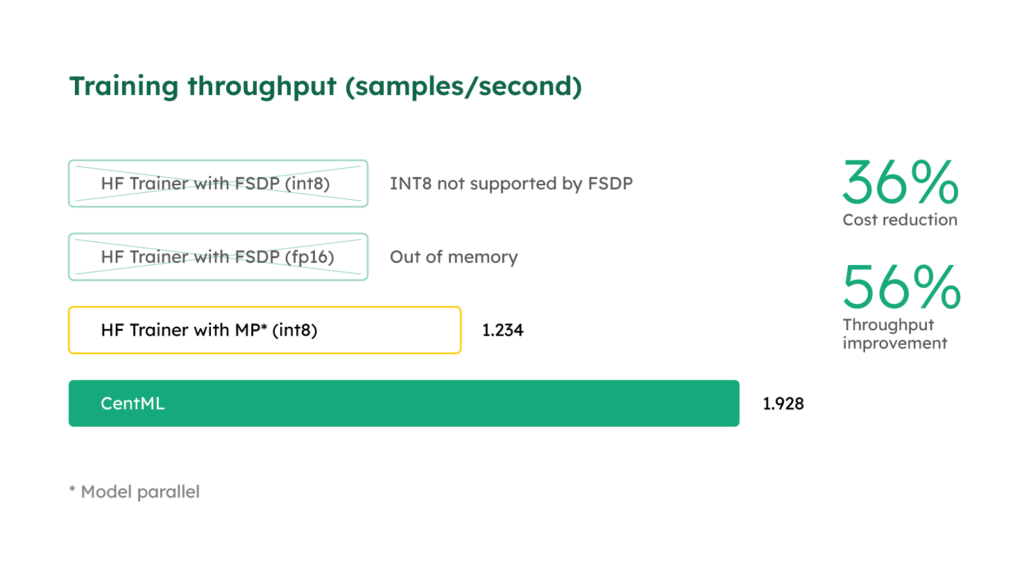

We then replaced the default Hugging Face Trainer data and model parallelism modules with DeepSpeed implementations and achieved a significant increase in the model throughput (1.92 samples per second) compared to the baseline of 1.23 samples per second (56% increase).

Today, the company can not only train faster but also train on-demand on more plentiful GPUs. This results in a hardware savings of nearly 36% and the ability to scale their model according to their plans.

The results

- 36% lower training cost

- 56% throughput improvement over the baseline implementation

Contact

Want to start optimizing your ML workflows? Contact us at sales@centml.ai or book a demo.

About CentML

CentML provides a complete solution for enterprises to develop and run AI models with great efficiency. We enable companies to stay ahead in AI innovation by speeding up API performance, improving model training capabilities, and delivering top-notch services to their customers while effectively managing their resources.

Share this