Insights

A Technical Deep Dive into Pipeline Parallel Inference with CentML

With yesterday’s release of Llama-3.1-405B, we’re excited to announce that CentML’s recent contribution to vLLM, adding pipeline parallel inference support, […]

How to profile a Hugging Face model with DeepView

Hugging Face has become a leading platform for natural language processing (NLP) and machine learning (ML) enthusiasts. It provides a […]

Introducing DeepView: Visualize your neural network performance

Optimize PyTorch neural networks for peak performance and cost efficiency in your deep learning projects

Maximizing LLM training and inference efficiency using CentML on OCI

In partnership with CentML, Oracle has developed innovative solutions to meet the growing demand for high-performance NVIDIA GPUs for machine […]

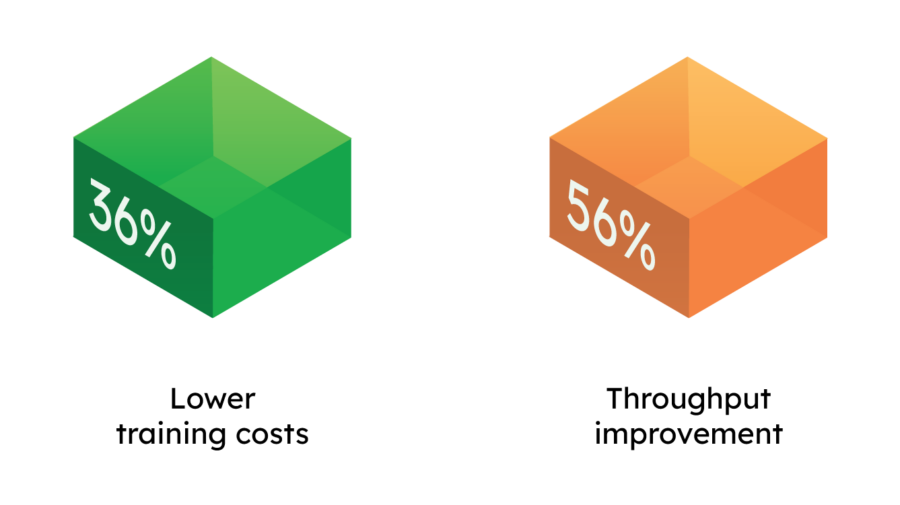

GenAI company cuts training costs by 36% with CentML

A growing generative AI company partnered with CentML to accelerate their API-as-a-service and iterate with foundational models—all without using top-of-the-line […]

Hardware Efficiency in the Era of LLM Deployments

How CServe can make LLM deployment easy, efficient and scalable